GenAI creates outputs based on the data it was trained on and on limited information about what the user wants to do with them. Many variants of fine-tuning for Large Language Models (LLMs) and of Retrieval Augmented Generation (RAG) architectures exist to improve those outputs. Still, generative AI continues to guess: it predicts the output from the inputs and its own training data. It is sometimes imprecise, often not completely repeatable, and cannot be fully reliable unless very specific steps are taken to make it so (full disclosure: contact Data-Hat AI if you want solutions to these issues).

Yet the output from GenAI can be sufficiently accurate for most use cases. It can also help humans think better. It can bring far more facts to the table, its memory of facts can be much larger than human memory, and it can be a great assistant in helping a human create better outputs. It can also “create” hallucinations when it doesn’t find facts, and state them so confidently that we believe them to be true.

Therefore, GenAI must be used with caution. LLMs must not be used to solve every analytical problem. It has become fashionable to use LLMs, but we often have to ask: at what cost? Do we need to risk an incorrect answer? Do we need to pay for an LLM query when the problem is much better solved another way?

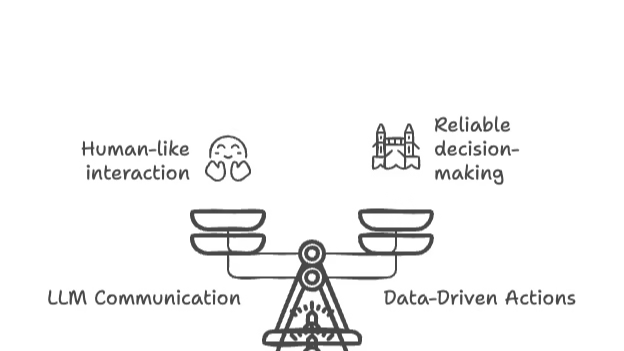

The answer: use LLMs where a human communicates with the AI. We tend to use them wherever there is an interface, whether voice, video or text. But the actual answers need not always be generated by the LLM. Use real, proven, data-driven actions where possible.

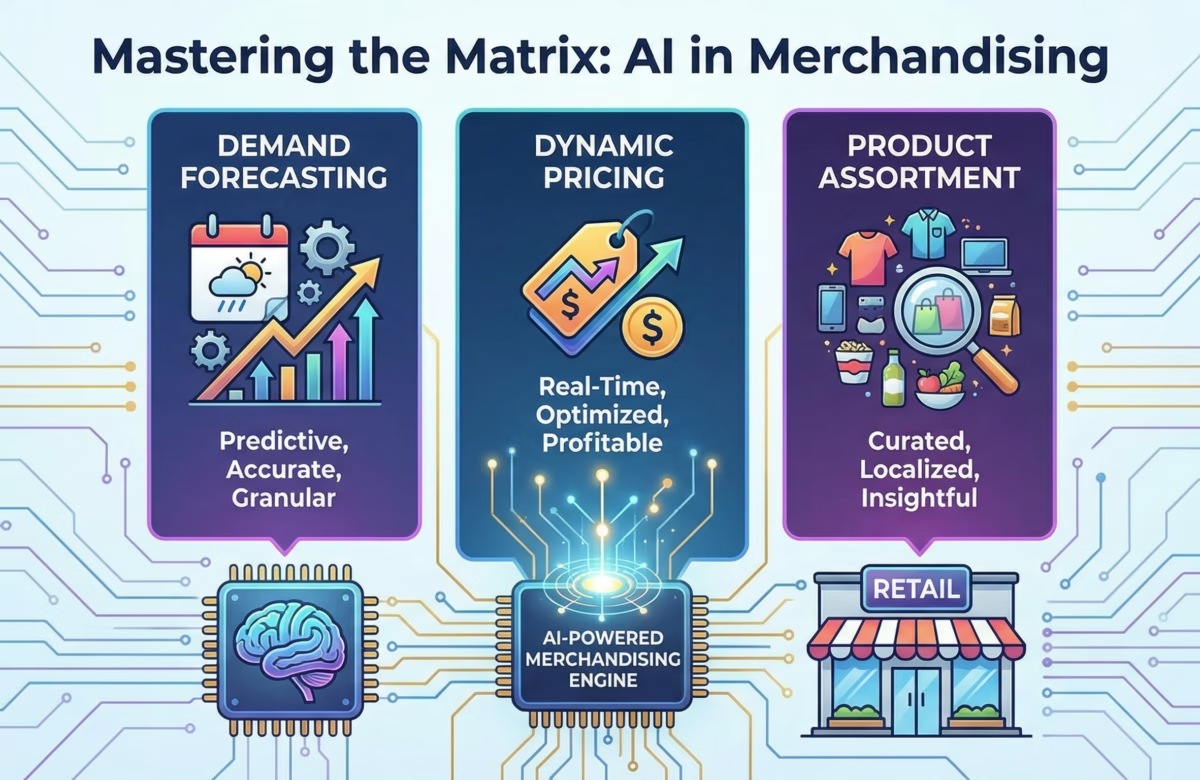

That’s where curated, high-quality data and human-validated insights make all the difference. These are rooted in real-world sources, repeatable processes, and tested methodologies. When decisions carry regulatory, financial, or operational consequences, dependable data and structured analytics outperform GenAI’s generative flexibility. And machine learning models that are created, tested and validated in the traditional way tend to outperform GenAI in real-world applications.

The smart path forward is a balance: we at Data-Hat AI use GenAI for ideation, language, and scale, but anchor decisions in verified insights and ML models that are specific and purpose-built. Think of GenAI not as a substitute for intelligence, but as an amplifier of it when grounded in data you can trust, which also happens to be the enterprise's differentiating capability.

Creating ML models trained on enterprise data, developing answers that combine proprietary domain knowledge with customer insights and business knowledge, and interacting with humans via natural language powered by GenAI: this is proving to be the right combination of GenAI and ML.
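To make that split concrete, here is a minimal Python sketch of the pattern, not a description of any production system. It assumes a single hypothetical intent (`churn_risk`), hypothetical customer IDs and scores, and simple stub functions standing in for the GenAI language layer; a real deployment would call an actual LLM for parsing and phrasing, and a validated ML model for the number. The point it illustrates is that the figure in the reply only ever comes from the validated component, never from generated text.

```python
import re
from typing import Callable, Dict, Optional


# Hypothetical stand-in for a tested, validated ML model trained on
# enterprise data. The IDs and scores below are invented for illustration.
def churn_risk(customer_id: str) -> float:
    return {"C001": 0.82, "C002": 0.11}[customer_id]


# Registry of validated components, keyed by intent name (assumed names).
MODELS: Dict[str, Callable[[str], float]] = {"churn_risk": churn_risk}

REFUSAL = "Sorry, I cannot answer that from validated data."


def parse_question(question: str) -> Optional[Dict[str, str]]:
    """Stub for the GenAI language layer: free text -> structured intent."""
    match = re.search(r"\bC\d{3}\b", question)
    if match and "churn" in question.lower():
        return {"intent": "churn_risk", "customer_id": match.group(0)}
    return None


def phrase_answer(intent: Dict[str, str], value: float) -> str:
    """Stub for the GenAI language layer: structured result -> sentence."""
    return (
        f"Customer {intent['customer_id']} has an estimated "
        f"churn risk of {value:.0%}."
    )


def answer(question: str) -> str:
    """Route the question: language in, validated number, language out."""
    intent = parse_question(question)
    if intent is None:
        return REFUSAL  # no validated component matches, so do not guess
    try:
        value = MODELS[intent["intent"]](intent["customer_id"])
    except KeyError:
        return REFUSAL  # unknown customer: refuse rather than invent a value
    return phrase_answer(intent, value)
```

Calling `answer("What is the churn risk for C001?")` returns a sentence built around the model's score, while a question with no matching validated component gets the refusal message instead of a generated guess.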

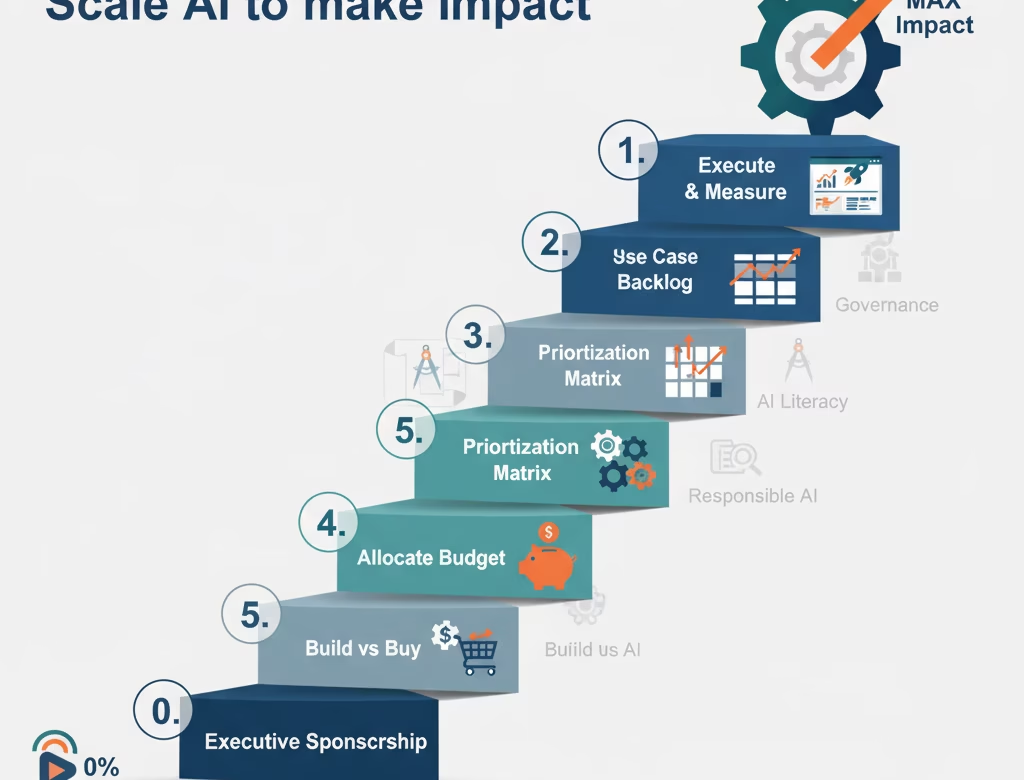

Of course, many tools are being built for responsible AI; these are needed and will be used as appropriate. But the bottom line is that GenAI is fundamentally generative, based on the data it has access to, and this in itself introduces issues that might never be solved by GenAI. Therefore, we need to use traditional data, ML and insights to solve those parts of the problem.

In the world of enterprise-grade decision-making, reliability matters. Generative AI is powerful, but our data and insights are proven.

The balance and integration of both will provide Responsible, Impactful AI.